Donny (Yuedong) Chen is currently a Singapore-based Research Scientist building 3D and 4D foundation models. ALL VIEWS ARE SOLELY HIS OWN (DISCLAIMER).

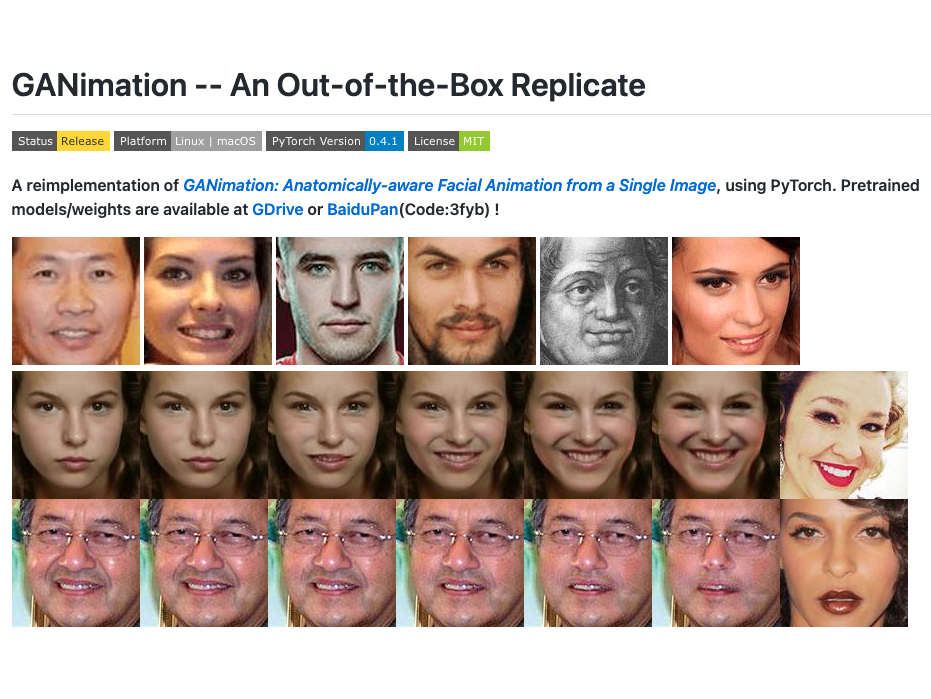

Previously, Donny received his PhD from Monash University under the supervision of Prof. Jianfei Cai, Prof. Tat-Jen Cham and Dr. Bohan Zhuang. His PhD research focused on Feed-Forward Novel View Synthesis from Sparse Observations. During his PhD, he collaborated closely with Haofei Xu, Prof. Marc Pollefeys, Prof. Andreas Geiger, Dr. Chuanxia Zheng, Prof. Andrea Vedaldi and Dr. Qianyi Wu.

Before that, he was a Research Assistant at the Institute for Media Innovation, NTU (Singapore). During this period, his research focused on enhancing emotion recognition by incorporating human prior knowledge.

His academic journey began at Sun Yat-sen University, where he earned both his BEng and MEng degrees in Software Engineering. During his BEng studies, he also spent a semester as an exchange student at National Chi Nan University (Taiwan), collaborating closely with David Cheng.

🤖🧠👌🏼 He prefers simple yet effective solutions

* indicates Equal Contribution

Depth Anything 3: Recovering the Visual Space from Any Views

Tech Report 2025

Haotong Lin*, Sili Chen*, Jun Hao Liew*, Donny Y. Chen*, Zhenyu Li, Guang Shi, Jiashi Feng, and Bingyi Kang*

Revisiting Depth Representations for Feed-Forward 3D Gaussian Splatting

3DV 2026

Duochao Shi*, Weijie Wang*, Donny Y. Chen, Zeyu Zhang, Jia-Wang Bian, and Bohan Zhuang

ZPressor: Bottleneck-Aware Compression for Scalable Feed-Forward 3DGS

NeurIPS 2025

Weijie Wang, Donny Y. Chen, Zeyu Zhang, Duochao Shi, Akide Liu, and Bohan Zhuang

Explicit Correspondence Matching for Generalizable Neural Radiance Fields

TPAMI 2025

Yuedong Chen, Haofei Xu, Qianyi Wu, Chuanxia Zheng, Tat-Jen Cham, and Jianfei Cai

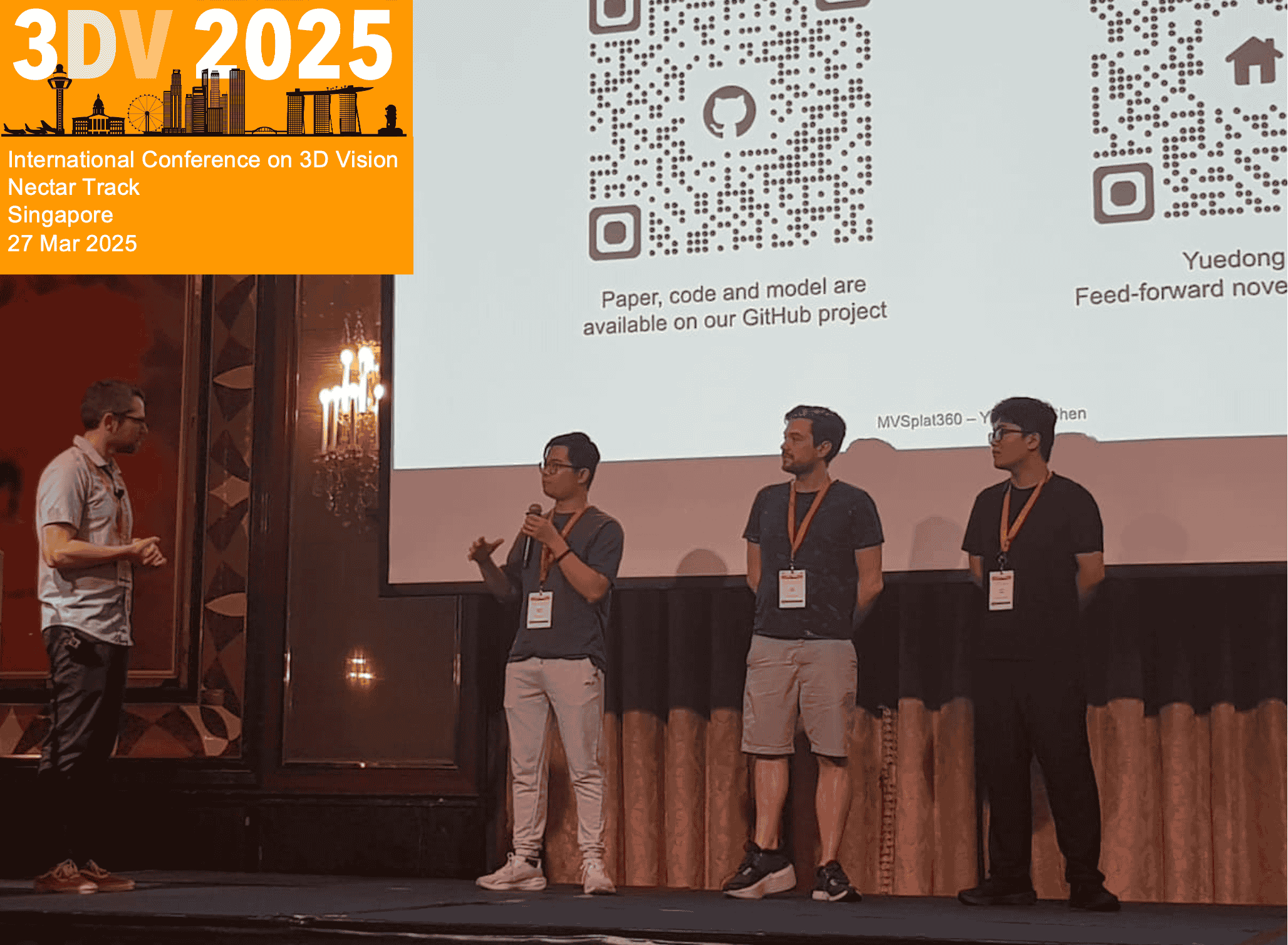

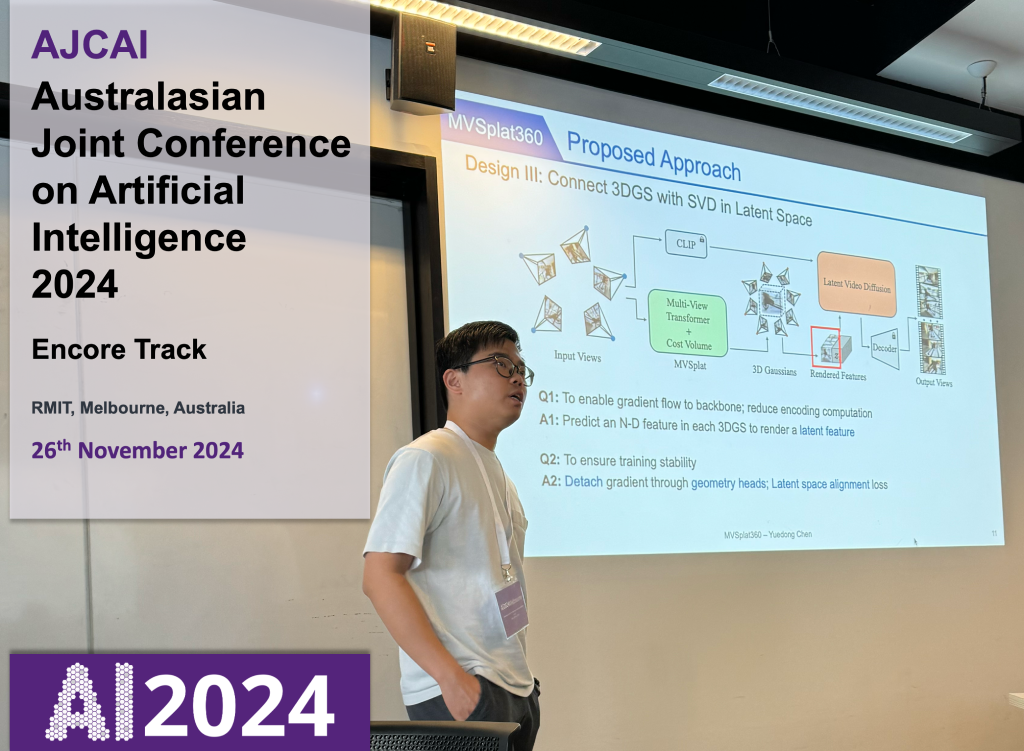

MVSplat360: Feed‑Forward 360 Scene Synthesis from Sparse Views

NeurIPS 2024

Yuedong Chen, Chuanxia Zheng, Haofei Xu, Bohan Zhuang, Andrea Vedaldi, Tat‑Jen Cham, and Jianfei Cai

MVSplat: Efficient 3D Gaussian Splatting from Sparse Multi-View Images

ECCV 2024 (Oral)

Yuedong Chen, Haofei Xu, Chuanxia Zheng, Bohan Zhuang, Marc Pollefeys, Andreas Geiger, Tat-Jen Cham, and Jianfei Cai

MuRF: Multi-Baseline Radiance Fields

CVPR 2024

Haofei Xu, Anpei Chen, Yuedong Chen, Christos Sakaridis, Yulun Zhang, Marc Pollefeys, Andreas Geiger, et al.

Sem2NeRF: Converting Single-View Semantic Masks to Neural Radiance Fields

ECCV 2022

Yuedong Chen, Qianyi Wu, Chuanxia Zheng, Tat-Jen Cham, and Jianfei Cai

Object-Compositional Neural Implicit Surfaces

ECCV 2022

Qianyi Wu, Xian Liu, Yuedong Chen, Kejie Li, Chuanxia Zheng, Jianfei Cai, and Jianmin Zheng

- 28-01-2025, Invited talk "Feed-forward NVS from Sparse Inputs" at Amazon, Tel Aviv, hosted by Lior Fritz.

- 08-11-2024, Invited talk "Feed-forward Novel View Synthesis" at Wayve, London, hosted by Joe Polin.

Oral presentation at ECCV 2024

Invited talk at SHUZIHUANYU

- Conference Reviewer: ECCV (’24), CVPR (’23-’26), ICCV (’23-’25), NeurIPS (’24-’25), ICLR (’25-’26), ICML (’25), 3DV (’24-’26), AAAI (’24-’26), ACMMM (’21-’24), ACCV (’24), ISMAR (’23-’24), IEEEVR (’24)

- Journal Reviewer: TPAMI, IJCV, TIP, TVCG, TMM, TCSVT, TOMM, TVCJ, Computers & Graphics, The Visual Computer

- Donny is a native speaker of Teochew, is fluent in English, Cantonese and Mandarin, and is also familiar with Singlish.

ALL OPINIONS AND VIEWS EXPRESSED ON THIS PAGE ARE SOLELY HIS OWN AND SHALL NOT BE INTERPRETED AS REPRESENTING OR IMPUTING THE VIEWS, POSITIONS, POLICIES, OR OFFICIAL COMMUNICATIONS OF HIS EMPLOYER. NO STATEMENT, COMMENT, OR MATERIAL PRESENTED HERE SHALL BE CONSTRUED AS PROFESSIONAL ADVICE OR AS ANY FORM OF AUTHORIZED ENDORSEMENT BY THE COMPANY. HE DOES NOT ENGAGE IN, NOR SHALL HE RESPOND TO, ANY INQUIRIES OR COMMUNICATIONS PERTAINING TO CONFIDENTIAL INFORMATION, INTERNAL PROJECTS, PROPRIETARY MATERIALS, OR ANY MATTERS THAT MAY AFFECT OR POTENTIALLY CONFLICT WITH THE INTERESTS OF HIS EMPLOYER. ALL REQUESTS FOR RESEARCH COOPERATION, FORMAL COLLABORATION, OR OTHER OFFICIAL ENGAGEMENTS MUST BE DIRECTED EXCLUSIVELY TO THE COMPANY THROUGH ITS DESIGNATED CHANNELS.